ollama-intel-arc

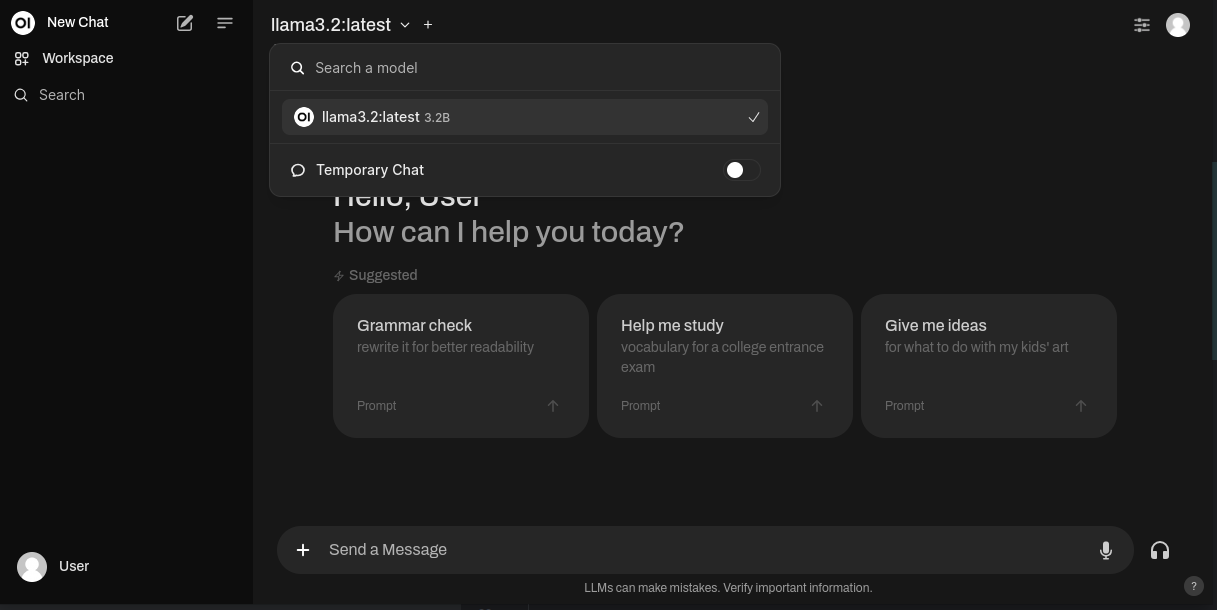

A Docker-based setup for running Ollama as a backend and Open WebUI as a frontend, leveraging Intel Arc Series GPUs on Linux systems.

Overview

This repository runs Ollama as the backend and Open WebUI as the frontend, so you can interact with large language models (LLMs) accelerated by an Intel Arc Series GPU on your Linux system.

Services

- Ollama

  - Runs llama.cpp and Ollama with IPEX-LLM on your Linux computer with an Intel GPU.

  - Built following the guidelines from Intel.

  - Uses Ubuntu 24.04 LTS, Ubuntu's latest stable version, as the container base image.

  - Uses the latest versions of required packages, prioritizing cutting-edge features over stability.

  - Exposes port 11434 for connecting other tools to your Ollama service.

  - To validate this setup, run:

    $ curl http://localhost:11434/

- Open WebUI

  - The official distribution of Open WebUI.

  - WEBUI_AUTH is turned off for authentication-free usage.

  - ENABLE_OPENAI_API and ENABLE_OLLAMA_API are set to off and on, respectively, so all interactions go through Ollama only.
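
The curl check above can be wrapped in a small polling helper, which is handy because the container may need a few seconds before it starts listening. This is only a sketch: the function name wait_for_ollama, the default of 30 attempts, and the 2-second per-request timeout are illustrative choices, not part of this repository.

```shell
# Hypothetical helper (not shipped with this repository).
# Polls the Ollama root endpoint until it answers or the attempts run out.
# Usage: wait_for_ollama [base_url] [max_attempts]
wait_for_ollama() {
  base_url="${1:-http://localhost:11434}"
  max_attempts="${2:-30}"
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # -f makes curl return non-zero on HTTP errors; --max-time bounds each try.
    if curl -fsS --max-time 2 "$base_url/" >/dev/null 2>&1; then
      echo "Ollama is reachable at $base_url"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep 1
  done
  echo "Ollama did not respond at $base_url after $max_attempts attempts" >&2
  return 1
}

# Example: wait for the service, then list the models it knows about.
# wait_for_ollama && curl -s http://localhost:11434/api/tags
```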

Setup

Fedora

$ git clone https://github.com/eleiton/ollama-intel-arc.git

$ cd ollama-intel-arc

$ podman compose up

Others (Ubuntu 24.04 or newer)

$ git clone https://github.com/eleiton/ollama-intel-arc.git

$ cd ollama-intel-arc

$ docker compose up
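
Before starting the stack, it can help to confirm that the host exposes a GPU render node, since containers usually reach Intel GPUs through device files under /dev/dri. The sketch below assumes that convention. The function name has_render_node is hypothetical, and this repository's compose file may pass the GPU through differently.

```shell
# Hypothetical pre-flight check (not shipped with this repository).
# Reports whether a DRM render node exists under the given directory.
# Usage: has_render_node [dri_dir]   (defaults to /dev/dri, an assumption)
has_render_node() {
  dri_dir="${1:-/dev/dri}"
  for node in "$dri_dir"/renderD*; do
    # If the glob matched nothing, $node is the literal pattern and -e fails.
    if [ -e "$node" ]; then
      echo "Found render node: $node"
      return 0
    fi
  done
  echo "No render node found under $dri_dir" >&2
  return 1
}

# Example: only start the services when a render node is present.
# has_render_node && docker compose up
```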

Usage

- Run the services using the setup instructions above.

- Open your web browser to http://localhost:3000 to access the Open WebUI web page.

- For more information on using Open WebUI, refer to the official documentation: https://docs.openwebui.com/
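
Besides the web page, the Ollama port described under Services can be called directly by other tools. The following sketch sends one non-streaming prompt to Ollama's /api/generate endpoint. The helper names are hypothetical, the model name llama3.2 is only a placeholder for a model you have already downloaded (for instance through Open WebUI), and the JSON body is built without escaping, so it only suits simple prompts.

```shell
# Hypothetical helpers (not shipped with this repository).

# Build the JSON body for a non-streaming /api/generate request.
# No JSON escaping is done: keep model and prompt free of quotes and backslashes.
build_generate_body() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Send a single prompt to a running Ollama service and print the JSON reply.
# Usage: ollama_generate <model> <prompt> [base_url]
ollama_generate() {
  model="$1"
  prompt="$2"
  base_url="${3:-http://localhost:11434}"
  curl -fsS "$base_url/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(build_generate_body "$model" "$prompt")"
}

# Example (llama3.2 is a placeholder; use a model you have pulled):
# ollama_generate llama3.2 "Why is the sky blue?"
```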